Our software runs a fully autonomous moving domain simulation that works on a wide range of geometries, including complex lattice designs. For each part, we automatically generate an adaptively refined tetrahedral mesh, which reduces computation cost while keeping the level of detail needed both to resolve the physics and to describe the topology of the input geometry.

A single support generation request entails about 700 finite element analyses on average and typically takes about 1.5 hours to finish.

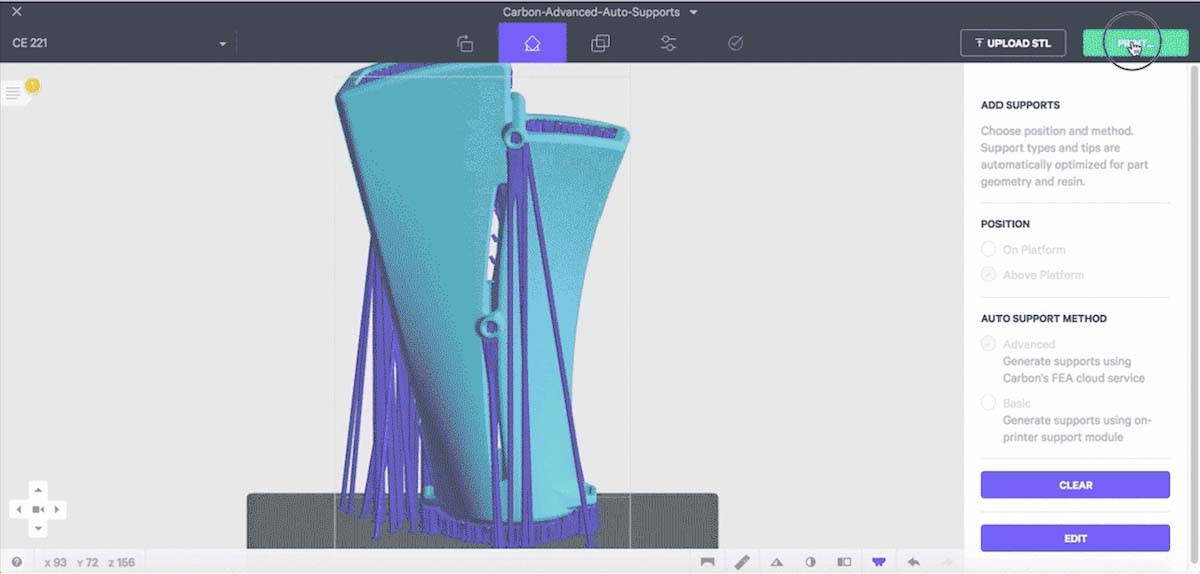

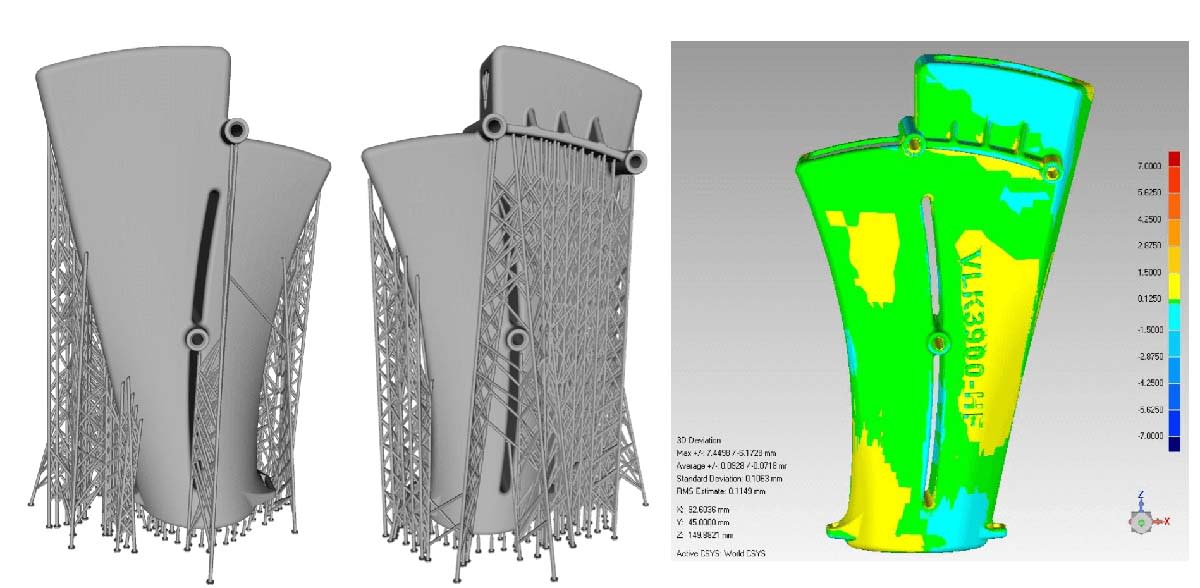

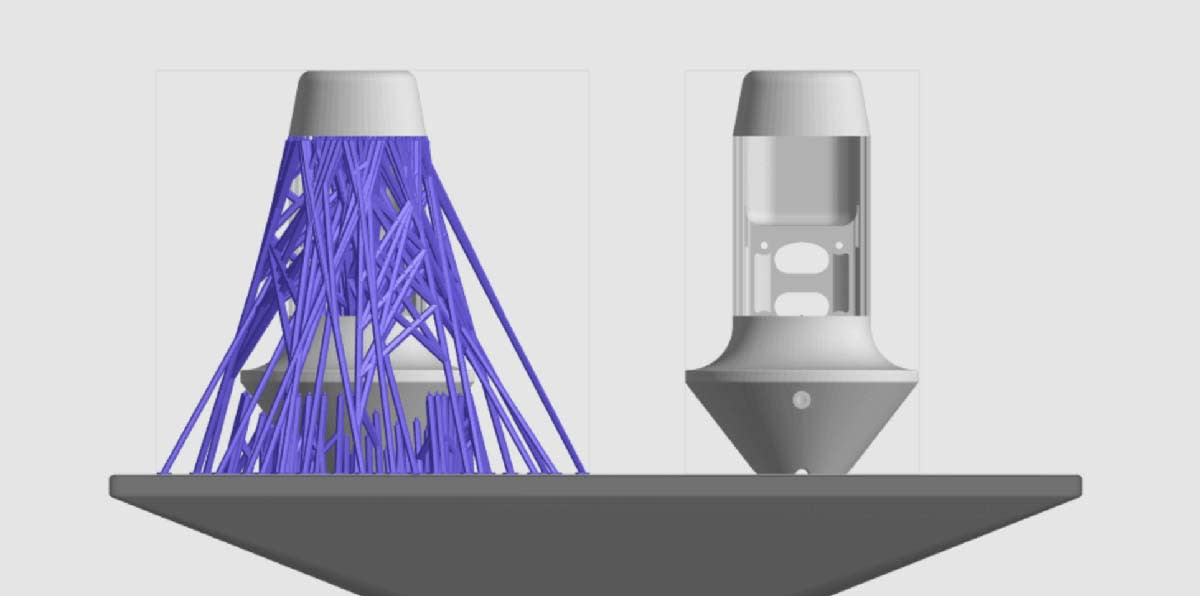

As an example, here’s a part designed by Carbon’s mechanical design team; it’s shown with and without supports:

Here are some additional noteworthy points about our simulation tools:

- Our finite element analysis code was built from scratch at Carbon. We do use widely used libraries such as PETSc for linear algebra where needed.

- We use a data-centric approach to choose the linear solver for the system of linear equations in each FEA (direct vs. iterative, serial vs. MPI).

- The analysis entails elastostatics simulations for our DLS process. At each slice (layer), liquid is transformed into solid, and the solid domain grows throughout the print.

- Moving domain simulations can be particularly challenging and costly, as they require generating a tetrahedral mesh that conforms to the part at any stage of the print.

- We tackle this problem by generating a tetrahedral mesh for the whole object just once and then adapting the mesh to a given slice layer based on the Universal Meshes mesh adaptation algorithm.

- This approach allows us to find the locations on the geometry that will go through large deformations or will sustain large stress that may affect the final shape or the mechanical properties of the part – e.g., the yellow/red regions in the animation below:

- We continue to invest in researching and implementing robust algorithms to further increase the accuracy of our process simulation. By making such simulation tools available to our customers in easy-to-use ways, we’re confident we can continue to increase both the quality and rate of production of parts designed for our process.
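To make the growing-domain idea in the points above more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not our production code: instead of adapting the mesh to each slice with Universal Meshes, it simply keeps the tetrahedra that lie entirely at or below the current layer height, and it picks a solver with a fixed size threshold rather than a data-driven rule. The function names and the threshold values (`direct_limit`, `serial_limit`) are hypothetical.

```python
# Nodes are (x, y, z) coordinates; tetrahedra are 4-tuples of node indices.
Node = tuple[float, float, float]
Tet = tuple[int, int, int, int]


def solid_elements(nodes: list[Node], tets: list[Tet], z_top: float) -> list[Tet]:
    """Return the tetrahedra that are fully solid once the print reaches z_top.

    Simplification: an element counts as solid only if all four of its
    vertices are at or below z_top. A conforming method would instead
    adapt the mesh so that element faces line up with the slice plane.
    """
    return [t for t in tets if all(nodes[i][2] <= z_top for i in t)]


def count_dofs(tets: list[Tet]) -> int:
    """Count displacement unknowns: 3 per distinct node used by the elements."""
    return 3 * len({i for t in tets for i in t})


def choose_solver(n_dofs: int,
                  direct_limit: int = 50_000,
                  serial_limit: int = 500_000) -> tuple[str, str]:
    """Pick (method, execution mode) from the problem size.

    The thresholds are placeholders for illustration only; a data-centric
    approach would derive the decision from measured solver performance.
    """
    method = "direct" if n_dofs <= direct_limit else "iterative"
    mode = "serial" if n_dofs <= serial_limit else "mpi"
    return method, mode


def plan_print(nodes: list[Node], tets: list[Tet], layer_heights: list[float]):
    """For each layer, report the solid domain size and the solver choice."""
    plan = []
    for z_top in layer_heights:
        active = solid_elements(nodes, tets, z_top)
        n_dofs = count_dofs(active)
        plan.append((z_top, len(active), n_dofs, choose_solver(n_dofs)))
    return plan
```

Calling `plan_print` with a mesh and a list of layer heights yields one entry per layer, which shows how the number of unknowns, and therefore the preferred solver, can change as the solid domain grows.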

AWS FLEET

Most of our automatic part design and simulation tools solve very computation-heavy problems, so it is important both to have access to significant computing power and to use it efficiently. Because we develop our software in-house, we can optimize every aspect of the software architecture to make efficient use of currently available resources:

- To be scalable and flexible to varying workloads, we utilize Amazon Web Services extensively. We automatically resize our fleet of virtual machines within seconds after our computation needs change. This lets us quickly scale up to perform large computations and scale down to avoid wasted resources when possible.

- To further reduce cost, we opportunistically rent virtual machines on the Amazon spot market instead of using the more expensive on-demand instances.

- Most of our computations are performed by efficient and mature high-performance computing tools like MPI. They are great at performing large computations fast and efficiently but are typically not designed for fault tolerance, which makes them challenging to run on a relatively unreliable fleet of machines. Although we have made significant progress towards making MPI-based linear algebra algorithms run successfully and reliably on AWS, we still have more work ahead.

- By designing our software from the ground up for this environment, we’ve achieved a combination that is flexible, cost-effective, reliable, and fast.
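The scaling and fault-tolerance ideas in the list above can be sketched in a few lines of Python. This is a simplified illustration, not our actual fleet manager: it makes no AWS calls, the names `desired_fleet_size`, `run_with_retries`, and `SpotInterruption` are hypothetical, and restarting a whole job after an interruption is only the simplest possible recovery strategy.

```python
import math
from typing import Callable, TypeVar

T = TypeVar("T")


class SpotInterruption(Exception):
    """Hypothetical signal that the machine running a job was reclaimed."""


def desired_fleet_size(pending_jobs: int,
                       jobs_per_vm: int,
                       min_vms: int = 0,
                       max_vms: int = 100) -> int:
    """Return how many machines the current workload calls for.

    The size is the number of machines needed to hold all pending jobs,
    clamped to [min_vms, max_vms] so the fleet scales down when idle and
    never grows past a budget cap. All limits here are assumed values.
    """
    needed = math.ceil(pending_jobs / jobs_per_vm)
    return max(min_vms, min(max_vms, needed))


def run_with_retries(job: Callable[[], T], max_attempts: int = 3) -> T:
    """Run a job, restarting it if its machine is reclaimed mid-run.

    Tools that are not fault tolerant on their own (such as an MPI solve)
    lose the whole run when one machine disappears, so this wrapper
    restarts the job from the beginning, up to max_attempts times.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except SpotInterruption:
            if attempt == max_attempts:
                raise  # give up and surface the failure to the caller
    raise ValueError("max_attempts must be at least 1")
```

For example, with 9 pending jobs and 4 jobs per machine, `desired_fleet_size(9, 4)` asks for 3 machines. Restarting from scratch wastes the work already done, which is one reason running long MPI computations on interruptible machines remains challenging.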